RISA

This stage transfers a data element from device to host.

#include <Template.h>

Public Types

| Kind | Name | Description |
|---|---|---|
| using | hostManagerType = glados::cuda::HostMemoryManager< float, glados::cuda::async_copy_policy > | Host memory manager for float data with the asynchronous copy policy. |
| using | input_type = glados::Image< glados::cuda::DeviceMemoryManager< float, glados::cuda::async_copy_policy >> | The input data type; it must match the output type of the previous stage. |
| using | output_type = glados::Image< glados::cuda::HostMemoryManager< float, glados::cuda::async_copy_policy >> | The output data type; it must match the input type of the following stage. |
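The requirement that input_type match the output type of the previous stage (and output_type the input type of the following stage) can be checked at compile time. The sketch below is a minimal illustration under stated assumptions: DeviceImage and HostImage are hypothetical stand-ins for the glados image types, and PreviousStage, DeviceToHostStage, and stages_connectable are hypothetical names, so the example compiles without glados (C++17).

```cpp
#include <type_traits>

// Hypothetical stand-ins for the glados image types (not the real glados API).
struct DeviceImage {};
struct HostImage {};

// Hypothetical upstream stage whose output lives in device memory.
struct PreviousStage {
    using input_type = HostImage;
    using output_type = DeviceImage;
};

// Hypothetical stage mirroring Template: device image in, host image out.
struct DeviceToHostStage {
    using input_type = DeviceImage;
    using output_type = HostImage;
};

// Two stages can be chained only if the upstream output type is exactly
// the downstream input type.
template <typename Upstream, typename Downstream>
inline constexpr bool stages_connectable =
    std::is_same_v<typename Upstream::output_type, typename Downstream::input_type>;

// Fails to compile if the pipeline is wired with mismatching types.
static_assert(stages_connectable<PreviousStage, DeviceToHostStage>,
              "output_type of the previous stage must match input_type");
```

Checking this with static_assert moves a wiring mistake from a runtime failure to a compile error at the place where the stages are connected.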

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | Template (const std::string &configFile) | Initializes everything that needs to be done only once. |
| | ~Template () | Destroys everything that is not destroyed automatically. |
| auto | process (input_type &&img) -> void | Pushes the image to the processor threads. |
| auto | wait () -> output_type | Takes one image from the output queue results_ and passes it to the following stage. |

Private Member Functions

| Return type | Member | Description |
|---|---|---|
| auto | processor (const int deviceID) -> void | Main data-processing routine of this stage; one instance runs in its own thread for each CUDA device. |
| auto | readConfig (const std::string &configFile) -> bool | Reads configuration values from the configuration file. |

Private Attributes | |

| std::map< int, glados::Queue< input_type > > | imgs_ |

| one separate input queue for each available CUDA device More... | |

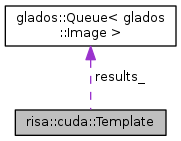

| glados::Queue< output_type > | results_ |

| the output queue in which the processed sinograms are stored More... | |

| std::map< int, std::thread > | processorThreads_ |

| stores the processor()-threads More... | |

| std::map< int, cudaStream_t > | streams_ |

| stores the cudaStreams that are created once More... | |

| unsigned int | memoryPoolIdx_ |

| stores the indeces received when regisitering in MemoryPool More... | |

| int | memPoolSize_ |

| specifies, how many elements are allocated by memory pool More... | |

| int | numberOfDevices_ |

| the number of available CUDA devices in the system More... | |

| int | numberOfPixels_ |

| the number of pixels in one direction in the reconstructed image More... | |

This stage transfers a data element from device to host.

Definition at line 39 of file Template.h.

using risa::cuda::Template::hostManagerType = glados::cuda::HostMemoryManager<float, glados::cuda::async_copy_policy>

Host memory manager for float data with the asynchronous copy policy.

Definition at line 42 of file Template.h.

using risa::cuda::Template::input_type = glados::Image<glados::cuda::DeviceMemoryManager<float, glados::cuda::async_copy_policy>>

The input data type; it must match the output type of the previous stage.

Definition at line 44 of file Template.h.

using risa::cuda::Template::output_type = glados::Image<glados::cuda::HostMemoryManager<float, glados::cuda::async_copy_policy>>

The output data type; it must match the input type of the following stage.

Definition at line 45 of file Template.h.

risa::cuda::Template::Template(const std::string &configFile)

Initializes everything that needs to be done only once.

Starts one processor thread for each CUDA device available in the system and allocates memory using the MemoryPool.

| [in] | configFile | path to configuration file |

Definition at line 39 of file Template.cu.
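The constructor's "one processor thread per CUDA device" pattern can be sketched in plain C++. This is an illustration under assumptions: ThreadPerDevice is a hypothetical class, the device count is passed in rather than queried from CUDA, and the processor() body only records its device ID instead of processing images.

```cpp
#include <algorithm>
#include <map>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical sketch of "one processor thread per CUDA device".
// In CUDA code the device count would typically come from
// cudaGetDeviceCount(); here it is a plain parameter so the sketch
// runs without a GPU.
class ThreadPerDevice {
public:
    explicit ThreadPerDevice(int numberOfDevices) {
        for (int deviceID = 0; deviceID < numberOfDevices; ++deviceID) {
            // Each thread receives its own device ID, like processor(deviceID).
            processorThreads_[deviceID] =
                std::thread(&ThreadPerDevice::processor, this, deviceID);
        }
    }

    ~ThreadPerDevice() { joinAll(); }

    // Waits for all processor threads to finish.
    void joinAll() {
        for (auto& entry : processorThreads_) {
            if (entry.second.joinable()) entry.second.join();
        }
    }

    // Device IDs whose processor thread has run, in ascending order.
    std::vector<int> startedDevices() {
        std::lock_guard<std::mutex> lock(mutex_);
        std::vector<int> ids = started_;
        std::sort(ids.begin(), ids.end());
        return ids;
    }

private:
    void processor(int deviceID) {
        // Real code would select the device (cudaSetDevice(deviceID)) and
        // then loop over that device's input queue.
        std::lock_guard<std::mutex> lock(mutex_);
        started_.push_back(deviceID);
    }

    std::map<int, std::thread> processorThreads_;
    std::mutex mutex_;
    std::vector<int> started_;
};
```

Keying the threads by device ID in a std::map mirrors processorThreads_ and lets the destructor join every thread before the object goes away.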

risa::cuda::Template::~Template()

Destroys everything that is not destroyed automatically.

Tells MemoryPool to free the allocated memory. Destroys the cudaStreams.

Definition at line 70 of file Template.cu.

auto risa::cuda::Template::process(input_type &&img) -> void

Pushes the image to the processor threads.

This function also performs the scheduling across multiple GPUs.

| [in] | img | input data that arrived from the previous stage |

Definition at line 80 of file Template.cu.
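The documentation states only that process() does the multi-GPU scheduling, not which policy it uses. The sketch below assumes a simple round-robin policy over the per-device input queues, with std::queue standing in for glados::Queue and a hypothetical class name RoundRobinDispatcher. It is single-threaded; in the real stage the input queues are also read concurrently by the processor threads.

```cpp
#include <cstddef>
#include <map>
#include <queue>
#include <utility>

// Hypothetical round-robin scheduler: every call to process() hands the
// element to the next device's input queue in turn. Assumes
// numberOfDevices > 0. Not thread-safe: std::queue stands in for the
// concurrent glados::Queue.
template <typename T>
class RoundRobinDispatcher {
public:
    explicit RoundRobinDispatcher(int numberOfDevices)
        : numberOfDevices_(numberOfDevices) {
        for (int deviceID = 0; deviceID < numberOfDevices_; ++deviceID) {
            imgs_.emplace(deviceID, std::queue<T>{});  // one input queue per device
        }
    }

    // Moves img into the scheduled device's queue and returns that device ID.
    int process(T&& img) {
        const int deviceID = nextDevice_;
        imgs_.at(deviceID).push(std::move(img));
        nextDevice_ = (nextDevice_ + 1) % numberOfDevices_;
        return deviceID;
    }

    // Number of elements waiting in a device's input queue.
    std::size_t queued(int deviceID) const { return imgs_.at(deviceID).size(); }

private:
    int numberOfDevices_;
    int nextDevice_ = 0;
    std::map<int, std::queue<T>> imgs_;
};
```

Round-robin keeps the per-device load even when all images cost about the same; a policy based on queue length would adapt better to devices of different speed.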

auto risa::cuda::Template::processor(const int deviceID) -> void [private]

Main data-processing routine of this stage, executed in its own thread for each CUDA device.

This method takes one image from its device's input queue in imgs_, transfers it from device to host using the asynchronous cudaMemcpyAsync() operation, and pushes the resulting host structure into the output queue results_.

| [in] | deviceID | specifies on which CUDA device to execute the device functions |

Definition at line 106 of file Template.cu.
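The per-image work of processor() can be sketched as follows. This is a simplified, single-threaded illustration, assuming plain std::vector<float> buffers in place of device and host images and std::queue in place of glados::Queue; processOne is a hypothetical name, and the comment only indicates roughly where the real cudaMemcpyAsync() device-to-host copy would go.

```cpp
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-ins: both "images" are plain float vectors here; in
// the real stage the input lives in GPU memory and the output in host memory.
using DeviceImage = std::vector<float>;
using HostImage = std::vector<float>;

// One iteration of a processor() loop for a single device.
// Returns false if there was nothing to process.
bool processOne(std::queue<DeviceImage>& imgs, std::queue<HostImage>& results) {
    if (imgs.empty()) return false;
    DeviceImage img = std::move(imgs.front());
    imgs.pop();
    // In CUDA this step would be roughly:
    //   cudaMemcpyAsync(host.data(), devicePtr, bytes,
    //                   cudaMemcpyDeviceToHost, stream);
    //   cudaStreamSynchronize(stream);
    HostImage host(img.begin(), img.end());
    results.push(std::move(host));
    return true;
}
```

In the real stage this body would sit inside a loop that blocks on the device's input queue instead of returning when it is empty.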

auto risa::cuda::Template::readConfig(const std::string &configFile) -> bool [private]

Reads configuration values from the configuration file.

All values needed for setting up the class are read from the config file in this function.

| [in] | configFile | path to config file |

| true | configuration options were read successfully |

| false | configuration options could not be read successfully |

Definition at line 134 of file Template.cu.
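readConfig() reports success or failure through its bool return value. The sketch below shows that contract, assuming a hypothetical plain-text "key = value" format with hypothetical keys numberOfPixels and memPoolSize; the actual RISA configuration format may differ. It reads from a std::istream so that either a std::ifstream opened on configFile or an in-memory string can be passed in.

```cpp
#include <istream>
#include <map>
#include <sstream>
#include <stdexcept>
#include <string>

// Hypothetical settings read by the stage; the key names and the
// "key = value" line format are assumptions, not RISA's actual format.
struct StageConfig {
    int numberOfPixels = 0;
    int memPoolSize = 0;
};

// Returns true only if both required keys are present with positive integer values.
bool readConfig(std::istream& in, StageConfig& cfg) {
    std::map<std::string, std::string> values;
    std::string line;
    while (std::getline(in, line)) {
        const auto eq = line.find('=');
        if (eq == std::string::npos) continue;  // skip lines without '='
        std::istringstream key(line.substr(0, eq));
        std::istringstream value(line.substr(eq + 1));
        std::string k, v;
        key >> k;    // extraction drops surrounding whitespace
        value >> v;
        if (!k.empty()) values[k] = v;
    }
    try {
        cfg.numberOfPixels = std::stoi(values.at("numberOfPixels"));
        cfg.memPoolSize = std::stoi(values.at("memPoolSize"));
    } catch (const std::exception&) {
        // std::out_of_range: key missing; std::invalid_argument: not a number
        return false;
    }
    return cfg.numberOfPixels > 0 && cfg.memPoolSize > 0;
}
```

Returning false instead of throwing lets the constructor decide how to react to an incomplete configuration.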

auto risa::cuda::Template::wait() -> output_type

Takes one image from the output queue results_ and passes it to the following stage.

Definition at line 102 of file Template.cu.

std::map< int, glados::Queue< input_type > > risa::cuda::Template::imgs_ [private]

One separate input queue for each available CUDA device.

Definition at line 81 of file Template.h.

unsigned int risa::cuda::Template::memoryPoolIdx_ [private]

Stores the index received when registering with the MemoryPool.

Definition at line 87 of file Template.h.

int risa::cuda::Template::memPoolSize_ [private]

Specifies how many elements are allocated by the memory pool.

Definition at line 89 of file Template.h.
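memoryPoolIdx_ and memPoolSize_ point to a register-once, preallocate-up-front pattern: the stage registers with the pool, keeps the returned index, and the pool allocates memPoolSize_ elements for it. The class below is a hypothetical sketch of that pattern (SimpleMemoryPool, registerStage, buffersFor, and freeMemory are illustrative names, not the glados MemoryPool API), using host std::vector<float> buffers instead of pinned or device memory.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical memory pool: a stage registers once, receives an index, and
// the pool preallocates a fixed number of equally sized buffers for it.
// This is not the RISA/glados MemoryPool API.
class SimpleMemoryPool {
public:
    // Preallocates `count` buffers of `elementsPerBuffer` floats and
    // returns the registration index.
    unsigned int registerStage(int count, std::size_t elementsPerBuffer) {
        if (count <= 0) throw std::invalid_argument("count must be positive");
        pools_.emplace_back(static_cast<std::size_t>(count),
                            std::vector<float>(elementsPerBuffer));
        return static_cast<unsigned int>(pools_.size() - 1);
    }

    // Number of buffers currently held for a registered stage.
    std::size_t buffersFor(unsigned int idx) const { return pools_.at(idx).size(); }

    // Releases all buffers of a stage, as a stage destructor would request.
    void freeMemory(unsigned int idx) {
        auto& buffers = pools_.at(idx);
        buffers.clear();
        buffers.shrink_to_fit();
    }

private:
    std::vector<std::vector<std::vector<float>>> pools_;
};
```

Allocating everything at registration time keeps allocations out of the per-image hot path, which matters most for pinned host or device memory.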

int risa::cuda::Template::numberOfDevices_ [private]

The number of available CUDA devices in the system.

Definition at line 91 of file Template.h.

int risa::cuda::Template::numberOfPixels_ [private]

The number of pixels in one direction of the reconstructed image.

Definition at line 92 of file Template.h.
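Assuming the image is square (numberOfPixels_ pixels in each direction) and stored as float, as input_type and output_type indicate, the size of one buffer and of the whole pool follows directly. The helper names imageBytes and poolBytes are hypothetical.

```cpp
#include <cstddef>

// Bytes for one square numberOfPixels x numberOfPixels image of floats
// (square shape is an assumption).
constexpr std::size_t imageBytes(std::size_t numberOfPixels) {
    return numberOfPixels * numberOfPixels * sizeof(float);
}

// Bytes occupied by a pool holding memPoolSize such images.
constexpr std::size_t poolBytes(std::size_t numberOfPixels, std::size_t memPoolSize) {
    return memPoolSize * imageBytes(numberOfPixels);
}
```

For example, with 4-byte floats, numberOfPixels_ = 1024 gives 1024 × 1024 × 4 = 4,194,304 bytes (4 MiB) per image, so a pool with memPoolSize_ = 10 needs 40 MiB.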

std::map< int, std::thread > risa::cuda::Template::processorThreads_ [private]

Stores the processor() threads.

Definition at line 84 of file Template.h.

glados::Queue< output_type > risa::cuda::Template::results_ [private]

The output queue in which the processed images are stored.

Definition at line 82 of file Template.h.

std::map< int, cudaStream_t > risa::cuda::Template::streams_ [private]

Stores the CUDA streams, which are created only once.

Definition at line 85 of file Template.h.